Visit us at all about automation 2024!

At all about automation 2024 in Straubing, visitors can experience how Roboception’s AI-based image processing solutions are advancing automation.

On this page you will find the latest information about our products, customer stories and events, educational articles, and other exciting news about the company.

At LogiMAT 2024 in Stuttgart, visitors can experience how Roboception’s AI-based image processing solutions are advancing intralogistics.

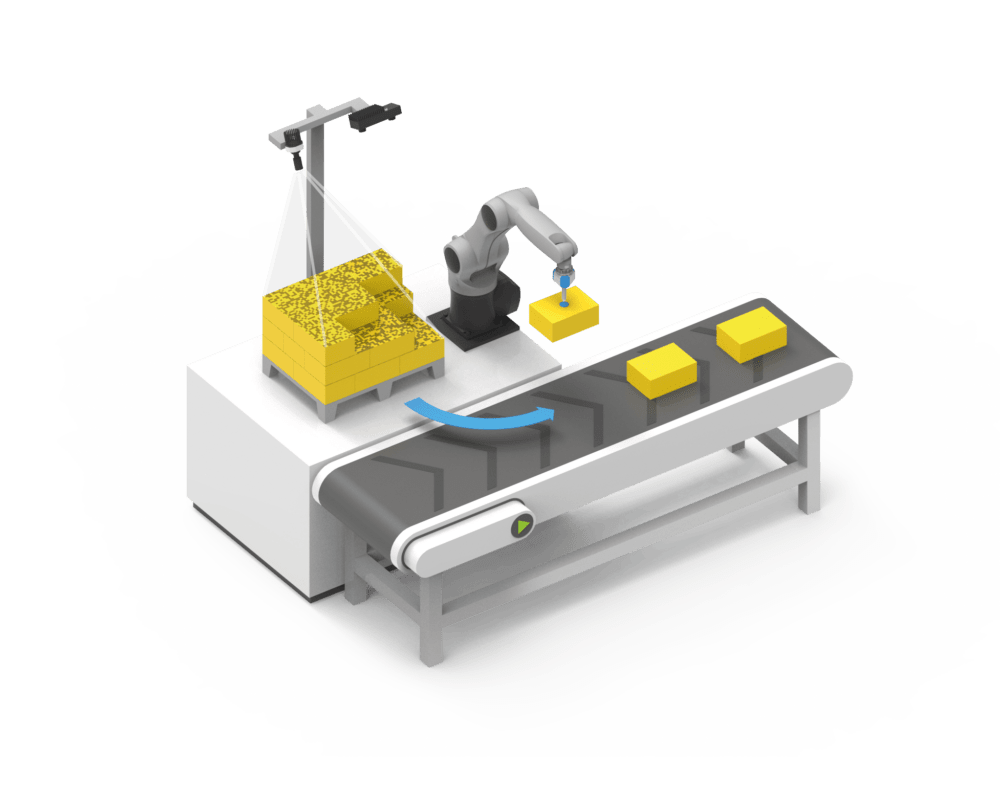

Roboception launches the +Match extension for BoxPick, enabling easy handling of rectangular products such as brochures or books with different prints.

Roboception hosts a workshop and an insight session at ERF 2024: Obtaining Good Data for Agile Production, Logistics and Lab Automation.

Roboception hosts a joint workshop for early-stage researchers (ESRs) and their supervisors in its Munich office as an activity of the 5GSmartFact EU project.

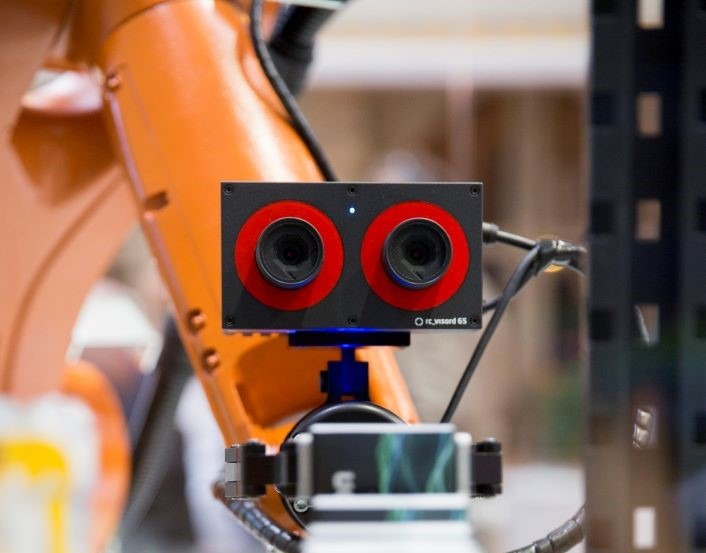

Roboception launches the intelligent robot vision platform rc_visard NG, which offers integrators and end users an adaptable and user-friendly solution.

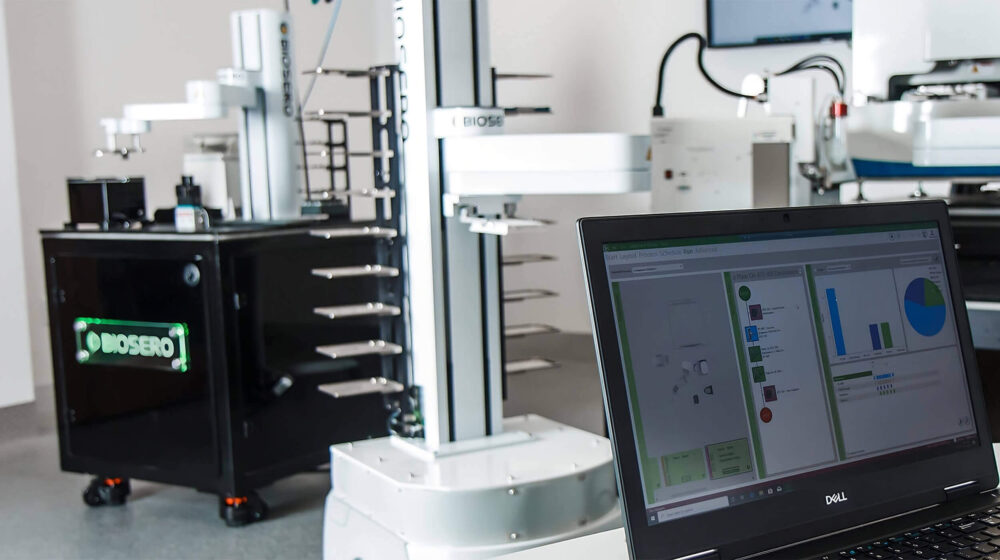

Explore the success story of lab automation specialist Biosero, Inc., which overcame the challenges of developing a precise mobile robot solution for labware transportation. Discover how they achieved 1 mm localization accuracy with Roboception’s rc_visard and TagDetect software module, turning a challenging project into a triumph.

Roboception and voraus robotik link application-oriented perception solutions with a robot operating system for flexible automation solutions.

Discover our cutting-edge robot vision solutions at automatica 2023 and join us at the KUKA booth A4.230 to discuss your robot vision application!

Following their positive experiences with Roboception’s solutions in previous projects, Danfoss Drives A/S turned to us again when they needed to improve an automated kitting set-up.

At ERF 2023 in Odense, Roboception provided valuable insights to the robotics industry with the workshop ‘Good Data in Agile Production, Logistics and Lab Automation’.

Robotic perception of the environment, processes and humans plays an essential role within the ODIN project. Roboception’s role in ODIN is to…