3D Robot Vision Helps Reduce Risk and Increase Production Volume

How the vision-based automation of loading and unloading massive aluminum casts reduces accident risks and leads to ~37% increased production volume

Automated kitting cells are all the rage: Automating labor-intensive yet low-value-add pick-and-place operations (such as compiling a selection of items into predefined kitting trays) enables manufacturers to optimize processes and deploy their workforce more effectively. However, reliable, efficient performance is key, and a robot vision system can help optimize it.

YES! (all uppercase and with an exclamation mark) was the response from Morten Hansen, Manufacturing Technology Engineer at Danfoss Drives A/S, when asked whether the company planned to use Roboception’s robot vision products in the future.

Danfoss was introduced to our robot vision when one of their integrators turned to an rc_visard and the SilhouetteMatch software: QRS – Quality Robot Systems implemented a feeding solution in which a robot recognizes and moves up to 100 different components without manual intervention.

“After the successful implementation of this feeding solution, we looked to Roboception first when we needed to improve a vision-based pick-and-place solution that we had already set up in one of our production lines,” says Morten Hansen: “This automated kitting cell just wasn’t performing to our expectations.”

The issue at hand: In an automated kitting set-up, a robot compiles an assortment of different parts, picking them directly from the suppliers’ pallets and placing them into different trays. Initially, a robot-mounted 2D camera was used to identify the parts for pick-and-place.

However, as soon as the positioning of the parts varied (e.g. if a pallet was slightly tilted, its contents had shifted even slightly, or a supplier changed the way it packed the parts), the set-up ran into problems:

“We simply had far too much downtime and engineering effort in the original set-up, and the cycle time wasn’t great either,” Hansen recalls. “With Roboception’s robot vision solution, we were able to add a third dimension into our process, making it significantly more robust and more flexible at the same time.”

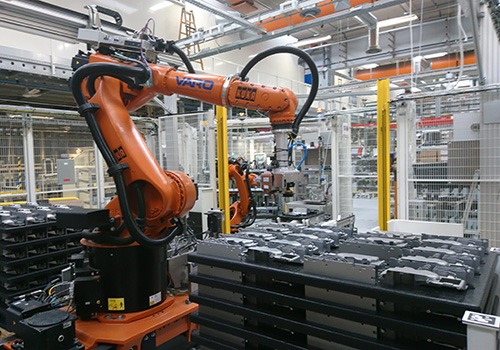

Two rc_viscores were mounted on rails above the cell. They are coupled with an rc_cube that runs both sensors, the rc_reason CADMatch software and several custom sorting strategies. AprilTags and a tailored software module in the rc_cube’s UserSpace (requiring no additional computing resources) keep the sensors precisely localized relative to the robot at all times. The robot vision solution communicates with the KUKA robot’s PLC via a REST API. The robot, too, sits on a linear rail so that it can reach the full length of the cell.
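The article does not describe how the AprilTag-based localization works internally. As a minimal sketch, assuming an AprilTag is fixed at a known pose in the robot's base frame and the sensor reports the tag's pose in its own frame, the sensor's pose in the robot frame follows from chaining and inverting 4x4 homogeneous transforms. The helper names and example poses below are hypothetical, and the actual UserSpace module may work differently.

```python
import numpy as np


def make_pose(rotation, translation):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T


def invert_pose(T):
    """Invert a rigid transform: R -> R^T, t -> -R^T t."""
    R, t = T[:3, :3], T[:3, 3]
    return make_pose(R.T, -R.T @ t)


def locate_sensor(T_robot_tag, T_sensor_tag):
    """Hypothetical helper: pose of the sensor in the robot base frame.

    T_a_b denotes the pose of frame b expressed in frame a, so
    T_robot_sensor = T_robot_tag @ T_tag_sensor = T_robot_tag @ inv(T_sensor_tag).
    """
    return T_robot_tag @ invert_pose(T_sensor_tag)


# Hypothetical example: the tag lies flat on the floor 1 m in front of the robot
# base, and a downward-looking sensor sees it 2.9 m along its optical (z) axis.
T_robot_tag = make_pose(np.eye(3), [1.0, 0.0, 0.0])
T_sensor_tag = make_pose(np.diag([1.0, -1.0, -1.0]), [0.0, 0.0, 2.9])

T_robot_sensor = locate_sensor(T_robot_tag, T_sensor_tag)
print(np.round(T_robot_sensor[:3, 3], 3))
```

Because both the sensors and the robot move on rails in this cell, a calculation of this kind would have to be repeated whenever a rail position changes, which is one reason to keep the tags permanently in view.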

This implementation is one of the first operational cells using the rc_viscore, the world’s first 12 MPi stereo sensor. Its high resolution allows the sensors to be placed well above the fairly large workspace:

With two rc_viscores mounted at a height of 2.9 m, the entire 5 m x 3 m floor space of this automated kitting cell is covered. Even small parts are detected reliably: In Danfoss’ current set-up, the smallest part measures 1.5 cm x 5.5 cm, and the sensors detect it with a sub-millimeter precision of 0.2 mm based on its CAD template. Avoiding an on-arm solution also shortens the cycle time directly: Image processing takes place while the robot is still executing the previous task.
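The article does not state the rc_viscore's field of view. As a rough plausibility check, assuming each of the two sensors is fixed and centred above one half of the 5 m x 3 m floor (the sensors actually sit on rails, so the real layout may differ), a simple pinhole model gives the opening angle each sensor would need to see its half from 2.9 m. The helper name and the equal split are illustrative assumptions.

```python
import math


def required_fov_deg(extent_m: float, height_m: float) -> float:
    """Full opening angle a downward-looking pinhole camera needs to see a
    strip of width `extent_m` centred below it from height `height_m`."""
    return math.degrees(2.0 * math.atan((extent_m / 2.0) / height_m))


# Dimensions from the text; splitting the cell length equally is an assumption.
CELL_LENGTH_M = 5.0
CELL_WIDTH_M = 3.0
MOUNT_HEIGHT_M = 2.9
NUM_SENSORS = 2

fov_along = required_fov_deg(CELL_LENGTH_M / NUM_SENSORS, MOUNT_HEIGHT_M)
fov_across = required_fov_deg(CELL_WIDTH_M, MOUNT_HEIGHT_M)
print(f"per-sensor field of view needed: {fov_along:.1f} deg x {fov_across:.1f} deg")
```

Mounting the sensors higher reduces the angle they need, but each pixel then covers more floor area; that trade-off is why high resolution matters for detecting a 1.5 cm x 5.5 cm part from this distance.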

“The new cell is running robustly and adapts to changes autonomously.

“What’s more: We were able to reduce the cycle time from 40 seconds to as little as 25 seconds. A pick-and-place operation per part now takes 7 seconds instead of 12 seconds in the previous set-up.”
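The quoted figures translate into relative improvements with simple arithmetic. The sketch below computes the percentage of time saved and the corresponding throughput gain, under the simplifying assumption (ours, not the article's) that the cell runs continuously and nothing else limits output.

```python
def percent_reduction(before_s: float, after_s: float) -> float:
    """Relative time saved, in percent of the original time."""
    return 100.0 * (before_s - after_s) / before_s


def throughput_gain(before_s: float, after_s: float) -> float:
    """Relative increase in operations per unit time, in percent.

    Assumes the cell is the bottleneck and runs continuously, so the rate
    scales with 1 / (time per operation).
    """
    return 100.0 * (before_s / after_s - 1.0)


# Times in seconds, taken from the quote above.
for label, before, after in [("cycle", 40.0, 25.0), ("pick-and-place per part", 12.0, 7.0)]:
    print(f"{label}: {percent_reduction(before, after):.1f}% less time, "
          f"{throughput_gain(before, after):.1f}% more per hour")
```

Note that a 37.5% shorter cycle is not the same as 37.5% more output: under the assumption above, it corresponds to 1.6 times as many cycles per hour.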

Last but not least, the team at Danfoss appreciates the constructive working relationship with the ‘Roboceptioneers’: The robot vision experts built on standardized products and optimized their use with individually tailored extensions. In addition, they were readily available to support the project with thorough simulations and testing prior to implementation.

Combined with intuitive user interfaces and regular software updates, this kept on-site installation time to a minimum. User training requirements were equally minimal. Additional or replacement parts are easily integrated into the automated kitting process using CAD-based templates. And now that the first cell has been successfully installed, it can be replicated 1:1 without any additional engineering effort.

Overall, Hansen’s wholehearted “YES!” to our initial question shows how satisfied Danfoss is with these results. He evidently saw no need for further details and went on to prove his point instead: Building on elements developed in this application (e.g. the tailored software module in the UserSpace, or the CADMatch detection templates), the next joint projects are already in the making.

Danfoss Drives is a global leader in AC/DC and DC/DC power conversion, as well as variable speed control for electric motors. With the world’s largest portfolio of power converters, VLT® drives, and VACON® drives at their fingertips, and backed by a partner whose legacy has been built on decades of passion and experience, their customers’ journey to a better future is only just beginning.

Given the large workspace and the variety of objects (including fairly small ones), two rc_viscores with a high resolution of 12 MPi are mounted on rails above the cell. Their on-board pattern projector keeps their sensitivity to lighting conditions low.

A single computing resource, the rc_cube, runs both rc_viscores, the CADMatch software module and several custom sorting strategies. In addition, the rc_cube’s UserSpace contains tailored software elements that support the application.

The rc_reason CADMatch Module relies on an object’s CAD data. It enables the robotic system to reliably pick a variety of objects from unmixed load carriers. Furthermore, it easily copes with changes in the object’s position and orientation.
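The article does not show how detected poses are turned into robot motions. As a sketch under stated assumptions, a grasp point defined once in the part's CAD frame can be mapped into robot coordinates for any detected position and orientation by chaining transforms: T_robot_grasp = T_robot_sensor @ T_sensor_object @ T_object_grasp. All poses and helper names below are hypothetical, and CADMatch's actual output format is not reproduced here.

```python
import numpy as np


def make_pose(rotation, translation):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T


def rot_z(deg):
    """Rotation matrix for a rotation of `deg` degrees about the z axis."""
    a = np.radians(deg)
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def grasp_in_robot_frame(T_robot_sensor, T_sensor_object, T_object_grasp):
    """Hypothetical helper: chain sensor calibration, detected object pose
    and the grasp defined in the CAD frame."""
    return T_robot_sensor @ T_sensor_object @ T_object_grasp


# Hypothetical grasp: 2 cm along the part's x axis, 1 cm above its origin (CAD frame).
T_object_grasp = make_pose(np.eye(3), [0.02, 0.0, 0.01])

# Hypothetical detection: part rotated 90 degrees about the sensor's z axis.
T_sensor_object = make_pose(rot_z(90.0), [0.1, 0.2, 2.8])

# Hypothetical calibration: downward-looking sensor 2.9 m above the point (1, 0, 0).
T_robot_sensor = make_pose(np.diag([1.0, -1.0, -1.0]), [1.0, 0.0, 2.9])

T_robot_grasp = grasp_in_robot_frame(T_robot_sensor, T_sensor_object, T_object_grasp)
print(np.round(T_robot_grasp[:3, 3], 3))
```

Because the grasp is defined once in the CAD frame, a shifted or rotated part only changes T_sensor_object; the rest of the chain stays the same.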

Would you like to find out whether our portfolio suits your robotic application? Simply request a free feasibility study and a live demo of our products.

Would you like to try out one of our sensors and software solutions? Our Try & Buy option lets you test our products before you decide, so you can be sure you make the right choice for your application.